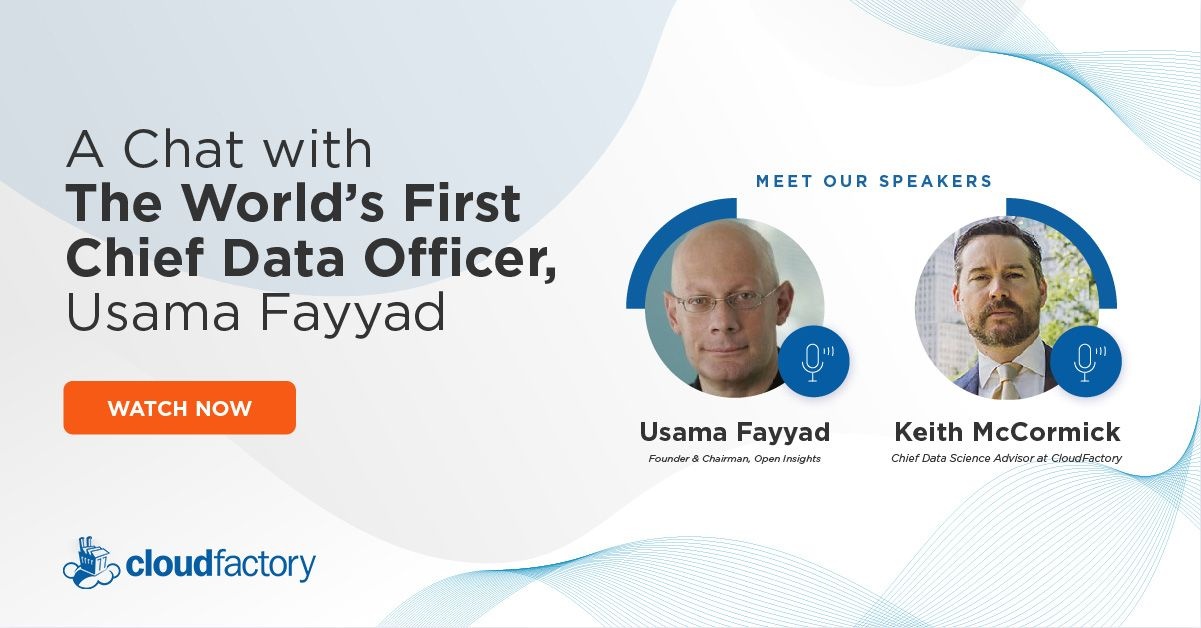

In our latest LinkedIn Live event, CloudFactory Chief Data Science Advisor Keith McCormick spoke with Usama Fayyad, a data science icon whose work has been advancing AI for three decades. Fayyad was recently named the inaugural executive director of the Institute for Experiential Artificial Intelligence at Northeastern University. Together, they explored the state of AI and the importance of combining human intervention with automation. Here are some key insights from the session.

Experiential AI sits at the intersection of humans in the loop (HITL) and artificial intelligence. At its core, experiential AI is application-driven, human-centric AI: it puts human abilities, skills, and intelligence at the center of how artificial intelligence is developed.

Here’s our take on what this intersection means for the future of AI.

1. Data Scientists Will Need New Skill Sets

Typically, a data scientist graduates with basic knowledge of concepts like algorithms and machine learning. From there, however, they're expected to learn on the job. The skills they build depend on their mentors, their coworkers, and the work they're exposed to.

The Institute for Experiential Artificial Intelligence is helping to fill this gap in data science education by creating courses and learning programs modeled on medical residency programs: they teach data scientists what they need to know by having them work side by side with professionals.

At the institute, Fayyad is building a solutions hub of data scientists, data engineers, and programmers, along with Northeastern faculty, postdocs, and research staff. They will work closely with organizations and government agencies, and with public datasets, to solve real problems with real data.

The goal is to produce data scientists who understand not only the science of the field but also its art. That way, they can bring value to organizations right away, because they know how to massage and adjust algorithms to extract useful insights.

“Nobody has standards and definitions for what a data scientist should know and be expected to do. Employers, candidates, and educational institutions are confused. The missing ingredient in the education of a data scientist is supervised, apprenticeship-based learning.” —Usama Fayyad

2. AI Will Extend Human Capabilities—Not Replace Them

When it comes to AI, data isn't useful until it's structured, labeled reliably, and integrated with machine learning to solve problems. Experiential AI goes beyond generating data: it seeks to understand what the data is and how to label it accurately, so that machine learning algorithms can operate correctly and with greater transparency.

Experiential AI also recognizes that algorithms can't operate in a silo; they can go awry when they encounter data they've never seen before. At these points, human intervention is needed to identify and explain the context and to guide next steps.

Instead of replacing a human with a robot, experiential AI is about extending human capabilities: drawing on the natural strengths of humans that can't be emulated in machines (such as common-sense reasoning and decision-making amid uncertainty) and deploying machines where humans are naturally weaker (such as reliable precision and consistency).

3. Humans & Algorithms Need to Work Together

Radiology is an excellent example of why humans should be integrated into AI decision-making. Digital X-rays capture data during the patient examination and transmit it to a human radiologist, who spends 10 to 15 minutes reviewing it before offering an interpretation. From there, a physician analyzes the readout, communicates the results to the patient, and guides them toward the right treatment path.

With millions of X-rays taken each year, could algorithms learn from the vast amount of X-ray data gathered over the years? Can AI identify regions of interest within an X-ray image and provide an accurate readout?

Even if AI provides accurate readouts most of the time, diagnosis involves human lives and health. Humans can step in and quickly verify whether the machine's readout was right or wrong. This check helps ensure that incorrect information isn't reported back to the provider or patient, and it also supplies corrections that help the algorithm "learn" from its mistakes.

This approach lets a machine do what it does best (analyze thousands of images and consistently flag anomalies without getting tired) while a radiologist applies their knowledge to ensure nothing is missed or misdiagnosed. When the algorithm is updated with these human double-checks, it can improve its readouts of future X-rays.
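To make this loop a little more concrete, here is a minimal, hypothetical Python sketch of the double-check step. It assumes every model readout is reviewed by a radiologist, that the human-verified finding is always what gets reported, and that disagreements are saved as corrected examples for a later retraining run. The names (`Readout`, `ReviewLoop`, `review`) are illustrative only and do not come from any real radiology system; the retraining itself is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Readout:
    image_id: str
    model_finding: str  # what the algorithm reported for this X-ray


@dataclass
class ReviewLoop:
    # Corrected (image_id, finding) pairs saved for a later retraining run
    corrections: list[tuple[str, str]] = field(default_factory=list)
    # How many model readouts the radiologist agreed with
    confirmed: int = 0

    def review(self, readout: Readout, radiologist_finding: str) -> str:
        """Return the finding to report; record any disagreement as a correction."""
        if readout.model_finding != radiologist_finding:
            # The human overrides the machine; keep the corrected label
            self.corrections.append((readout.image_id, radiologist_finding))
        else:
            self.confirmed += 1
        # Only the human-verified finding reaches the physician and patient
        return radiologist_finding


loop = ReviewLoop()
loop.review(Readout("xr-001", "no anomaly"), "no anomaly")
loop.review(Readout("xr-002", "no anomaly"), "nodule")
print(loop.confirmed, loop.corrections)  # prints: 1 [('xr-002', 'nodule')]
```

The design choice here mirrors the article's point: the machine does the first pass, but the human decides what is reported, and each disagreement becomes training data rather than being thrown away.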

“We have not solved any problem using AI the same way that a human brain solves it. We have found shortcuts, we have found ways around it, and we have found ways to estimate it. But it shouldn't discourage us. It takes a long time to understand these complex phenomena.” —Usama Fayyad

Learn even more about the state of AI by watching the full on-demand video of the chat between Keith and Usama. To participate in future events, please follow CloudFactory on LinkedIn.
