We've yet to meet an ML engineer who backs away from a challenge. In a world where ML solution development is becoming increasingly complex and cutthroat, ML engineers are determined to find a way to stay ahead of the curve.

They know the key to success is ruthlessly automating as much of their workflow as possible. This allows them to focus on more strategic work and deliver solutions that meet their desired performance metrics more quickly and cost-effectively.

What if they could do this up to 5x faster?

Foundation models may be the answer.

What are foundation models?

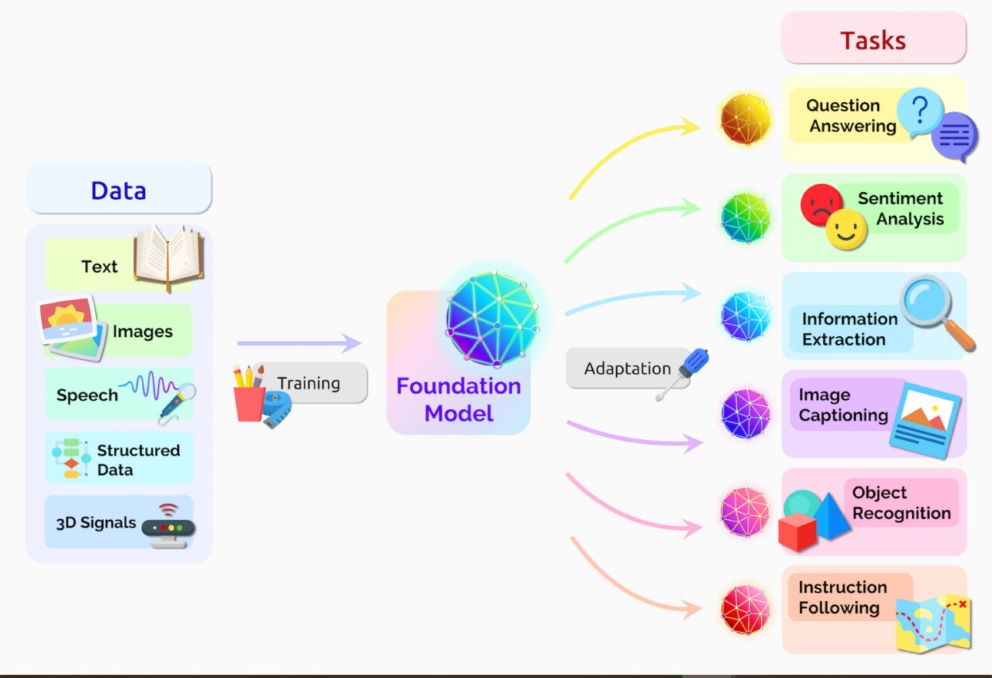

A foundation model is a large, pre-trained model that can be adapted to various downstream tasks. Foundation models are typically trained on massive unlabeled datasets, which allows them to learn general-purpose representations of the world. The model can then be fine-tuned on smaller labeled datasets to perform specific tasks in areas such as image classification, natural language processing, or machine translation.

Foundation models can save you significant time and effort, and they can also improve your model metrics. A gain in precision or Hamming score is a strong bonus.

Foundation models are large, versatile AI models that can be trained on many data modalities, such as text, images, and code. Once trained, they can be adapted to perform downstream tasks such as translation, summarization, and question answering. (Source: ResearchGate)

CloudFactory has integrated the best parts of some of the most popular foundation models into Accelerated Annotation, our best-in-class data labeling and workflow solution.

You're likely familiar with some of the best-known foundation models, such as:

- BERT: A linguistic wizard. A language model that learns to represent words and phrases in a way that captures their meaning and context.

- GPT: A master of language. A language model that can generate text, translate languages, write different kinds of creative content, and answer your questions conversationally.

- DALL·E: A visionary artist. A model that can generate images from text descriptions.

- Stable Diffusion: A painter's palette. A model that can generate images from text descriptions, inpaint missing portions of pictures, and extend an image beyond its original borders.

- Segment Anything Model (SAM): An instance segmentation master. A model that can "cut out" any object, in any image, in a single click.

Why should you use foundation models for Vision AI projects?

- You'll save money and time. When adapting a foundation model to a specific task, developers often need to train only the model's head (its final, task-specific layers), which is much faster and cheaper than training the entire model from scratch.

- You'll get better performance. The foundation model provides a strong base for the fine-tuned model, which can lead to better performance on the customer's use case.

- You'll have more flexibility. Foundation models can be fine-tuned for various use cases, giving customers more flexibility in how they use Vision AI.
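To put the cost difference in perspective, here is a back-of-the-envelope sketch. It assumes a ViT-B/16-sized backbone (roughly 86 million parameters, producing 768-dimensional features) topped by a single linear classification head. Both figures are illustrative assumptions, not details of any specific product or of CloudFactory's models.

```python
def linear_head_params(feature_dim: int, num_classes: int) -> int:
    """Trainable parameters in one linear head: a weight for every
    (feature, class) pair plus one bias per class."""
    return feature_dim * num_classes + num_classes


# Illustrative assumption: a ViT-B/16-sized backbone (~86M parameters)
# that outputs 768-dimensional image features. It stays frozen.
BACKBONE_PARAMS = 86_000_000
FEATURE_DIM = 768


def trainable_fraction(num_classes: int) -> float:
    """Share of all parameters that are updated when only the head trains."""
    head = linear_head_params(FEATURE_DIM, num_classes)
    return head / (BACKBONE_PARAMS + head)


if __name__ == "__main__":
    for k in (2, 10, 100):
        head = linear_head_params(FEATURE_DIM, k)
        print(f"{k:>3} classes: {head:,} trainable head parameters "
              f"({trainable_fraction(k):.4%} of the total)")
```

Parameter count is only part of the saving: because the backbone is frozen, its features can be computed once and cached, so each training step only has to update the small head.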

Step-by-step outline of CloudFactory's foundation model approach for Vision AI projects

Step 1. We select the latest foundation models available to the ML community. Once we have selected a model, we design and train a neural network that extracts useful features from large numbers of images. We prefer foundation models built with a self-supervised approach, which learn from unlabeled images, as this can provide even more immediate value.

Step 2. We use transfer learning to fine-tune the foundation model's head for the customer's specific use case. CloudFactory's Accelerated Annotation trains the model on a small sample of the customer's dataset (as few as ten images) so it learns how to transform the extracted features into the correct predictions.
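The idea behind this step can be sketched in a few lines of plain Python. This is a minimal, toy illustration under stated assumptions, not Accelerated Annotation's implementation: `frozen_backbone` is a hypothetical stand-in for a real foundation model, each "image" is just eight grayscale values, and the head is a simple logistic-regression classifier trained with gradient descent on ten labeled examples. The backbone never changes; only the head's weights and bias are learned.

```python
import math
import random

PIXELS = 8  # toy "images": 8 grayscale values in [0, 1]


def frozen_backbone(image):
    """Hypothetical stand-in for a pretrained foundation model.
    It is a fixed function that is never updated during fine-tuning."""
    mean = sum(image) / len(image)
    frac_bright = sum(p > 0.5 for p in image) / len(image)
    return [mean, frac_bright]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(features, labels, lr=1.0, epochs=2000):
    """Fit a binary logistic-regression head on frozen features with
    full-batch gradient descent. Only w and b are learned."""
    dim = len(features[0])
    w, b = [0.0] * dim, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(head, image):
    """Run the frozen backbone, then the trained head; return 0 or 1."""
    w, b = head
    x = frozen_backbone(image)
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


def make_image(rng, bright):
    """Generate a toy image: bright (label 1) or dark (label 0)."""
    lo, hi = (0.6, 1.0) if bright else (0.0, 0.4)
    return [rng.uniform(lo, hi) for _ in range(PIXELS)]


if __name__ == "__main__":
    rng = random.Random(0)
    labels = [1, 0] * 5  # ten labeled examples
    images = [make_image(rng, bool(y)) for y in labels]
    feats = [frozen_backbone(img) for img in images]  # extracted once
    head = train_head(feats, labels)
    new_images = [make_image(rng, bright) for bright in (True, False)]
    print([predict(head, img) for img in new_images])
```

In practice the head is usually a linear layer or small MLP trained in a deep learning framework on features from a real backbone; the toy features here only make the "frozen features in, small learned head out" structure easy to see.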

Step 3. We use the fine-tuned model to automate tasks. As the project progresses, Accelerated Annotation's AI assistant can be retrained on newly annotated data so its quality keeps improving.

If you're building your annotation strategy and need more detail about Vision AI decision points, download our white paper, Accelerating Data Labeling: A Comprehensive Review of Automated Techniques. You won't be disappointed.