Executive Summary

The AI lifecycle moves from proof of concept to proof of scale, then to model in production. This is a cyclical process, and people play an important role. Humans in the loop (HITL) inspect, validate, and make changes to algorithms to improve outcomes. They also collect, label, and conduct quality control (QC) on data. The benefits of humans in the loop begin with model development and extend across the AI lifecycle, from proof of concept to model in production.

- Case study 1: A managed team builds a dataset of images to accelerate model development.

- Case study 2: Workforce teams label and check the quality of data annotations that had required farming expertise.

- Case study 3: Data analyst teams resolve exceptions on sports player analytics.

Poor utilization of people in the AI lifecycle is costly and yields low-quality data and poor model performance. It is best to consider workforce early in the AI lifecycle, and before model development begins, to achieve higher quality outcomes.

Read the full guide below, or download a PDF version of the guide you can reference later.

The AI Lifecycle Challenge

Building any solution that applies artificial intelligence (AI) means the journey will be perilous. The development team is among a sea of protagonists, most of whom will fail in their quest to use machine learning to solve a problem, launch a product, or transform an industry.

They will encounter many challenges: data scarcity, dirty data, workforce burdens, algorithm and quality control (QC) failures, and more. At every stage of the AI lifecycle, they will make decisions about their data and their workforce that will determine success or failure. Many of those choices will dictate their ability to quickly make changes in their process that could improve model performance.

Innovators have applied external workforces and technology to tackle these challenges and accelerate the AI lifecycle. Some have attempted to speed data processing at scale with crowdsourcing or automation, both of which require in-house staff and ongoing management. Others have used traditional business process outsourcing (BPO) providers. Within the last decade, managed outsourced teams have emerged.

No matter the approach, how development teams deploy people throughout the AI lifecycle is an important decision with far-reaching consequences. Knowing when and where to do so is key to reducing the number of failed AI projects and the time that is wasted on them.

In this guide, we share what we have learned from annotating data for hundreds of AI projects, from computer vision to natural language processing (NLP). To learn about how to design a human-in-the-loop workforce to build and maintain high-performing machine learning models, read on.

If you are interested to speak with a workforce expert now, get in touch.

Human-in-the-Loop Machine Learning (HITL)

The term “human in the loop” originated to describe any model that requires human interaction. Where the human-in-the-loop (HITL) approach is used in real time and models are continually trained and calibrated, people are essential to achieving desired results. Examples include chatbots and recommendation engines.

Similarly, human-in-the-loop machine learning refers to the stages of the model development process that require a person to inspect, validate, or change some part of the process to train and deploy a model into production. An example is when a data engineer verifies a machine learning model's predictions before moving it to the next stage of development.

HITL also can extend to the people who prepare and structure data for machine learning.

In fact, human-in-the-loop machine learning includes all of the people who work with data that will be used, including the people who collect, label, and conduct quality control (QC) on data.

Click To TweetHumans in the Loop: Data Preparation and Quality

There is a lot of thankless work that goes into the creation of a dataset, whether it will be used to train a model or to maintain one in production. Data drives the model development process, and working with data requires people.

Indeed, people are involved across the AI lifecycle, from exploratory data analysis to machine learning production. About 80% of total project time is consumed with data preparation, labeling, and processing, and the majority of that work is done by people, according to analyst firm Cognilytica.

Quality is an important factor because a machine learning model is only as good as the data that trains and retrains it. Quality data is the key to creating high-performance AI. Traditionally, scaling a people operation for this kind of work was difficult, so many AI project teams turned to crowdsourcing. This approach gave them access to a large number of workers, which provided one element of the required scale.

But data quality often suffered because workers were anonymous and changed over time. The resulting lack of accountability and workers’ unreliable communication with the AI development team stalled development and became costly, creating burdens on valuable internal resources and causing data annotation rework, low quality data, and poor model performance.

Poor utilization of people across the AI lifecycle - starting with the model development process - can lead to poor quality data, higher costs, and model failure.

Click To TweetThe majority (96%) of AI project failures occur midway through the model development process, due to poor data quality, labeling, or modeling, according to Dimensional Research.

Today, many organizations understand how people are involved in data labeling. Yet, many haven’t yet leveraged the benefits of human-in-the-loop machine learning across the AI lifecycle.

Deploying people strategically at other stages in the model development process, from testing to model maintenance and optimization, can improve models and their outcomes. Specifically, a trained and managed workforce can speed the time to market and reduce the burden of data work on internal resources.

The benefits of humans in the loop extend across the AI model development process:

- Continuous improvement of machine learning and deep learning models requires people for data labeling, quality control (QC), and modeling.

- Automation can speed the development process but it requires people to build, maintain, and monitor exceptions.

- People design the processes that integrate an AI solution into an organization.

- When AI models fail, people must step in to mitigate risk and resolve problems.

Accelerating Model Development with Human in the Loop

In the model development process, there are seven key areas where a human in the loop can make a significant impact. These areas are represented by the blue boxes in the image. In this context, human in the loop refers specifically to a data workforce, acknowledging the enormous amount of work performed by data scientists and engineers beyond the scope of this simplified process.

Many teams outsource data gathering, cleaning, and annotation so they can assign data scientists and other high-cost talent to projects that require more advanced skills. However, people are helpful far beyond these stages in the process. Teams can strategically deploy a managed workforce at every phase of model development to scale and accelerate the process.

Automation is helpful, and the optimal approach is blending it with people. When applying automation, it is important to consider how much time it will save - and cost, in terms of maintaining the tools, managing the people who use them, and monitoring for exceptions. Let’s take a closer look at how people are involved, and where they can be more strategically applied to accelerate the process.

HITL AND THE AI MODEL DEVELOPMENT PROCESS

In the model development process, there are seven key areas where a human in the loop can make a significant impact. These areas are represented by the blue boxes.

Design and Build

Exponential amounts of quality data are required for peak algorithm performance. In fact, 80% of AI project time is spent in this phase of the model development process, including data acquisition, cleaning, and annotation. A human-in-the-loop workforce can complete much of the day-to-day data work that is required during these early, critical stages of deployment.

Whether labels are applied using manual or automated means, a human-in-the-loop workforce can be used to acquire and prepare data for annotation. With data cleansing and enrichment in particular, subjectivity and understanding of edge cases are important so this nuanced work can be completed accurately.

A human workforce plays a critical role in the acquisition and cleansing process, especially in cases where web scraping or data enrichment is involved, to ensure that only the most relevant, complete data is applied to the project.

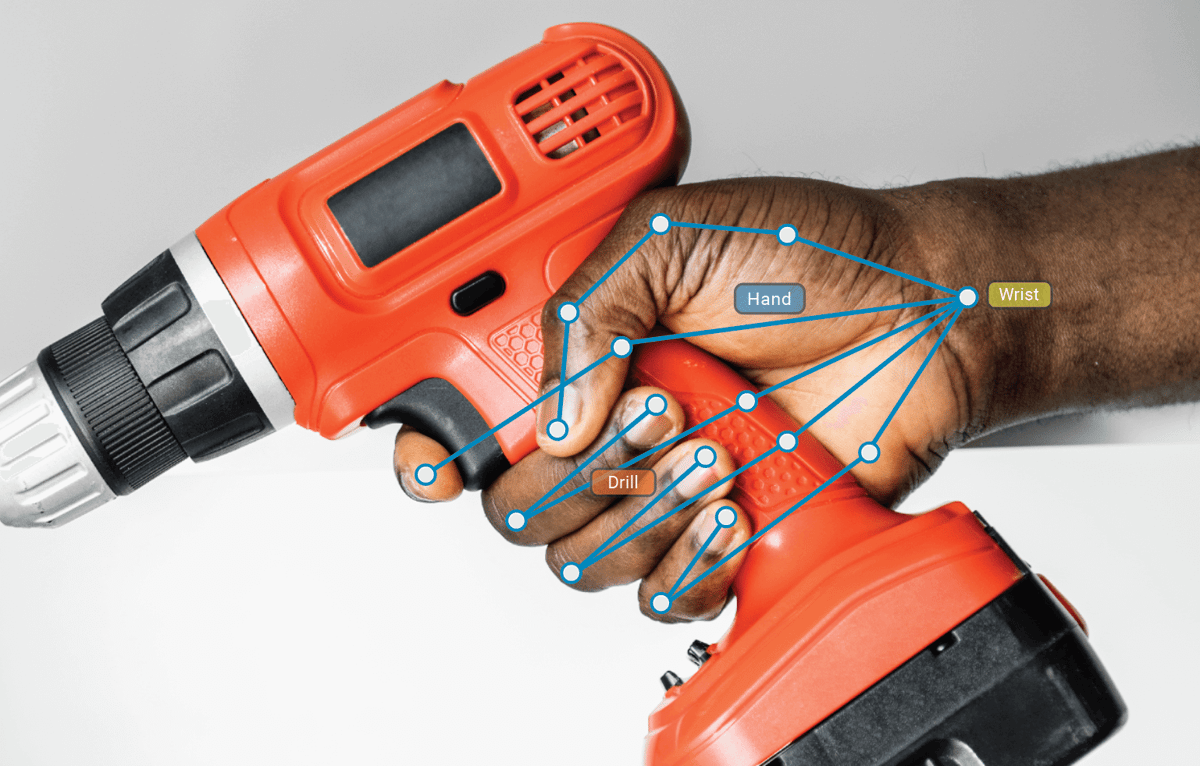

A company was using computer vision to build analytics solutions and needed to acquire a dataset of objects being held by human hands.

CASE STUDY 1:

Data Acquisition and Cleansing

A company uses computer vision to build analytics solutions that transform video into reliable information for their clients. They needed to acquire a dataset of images to train their algorithm to identify different objects being held by human hands. Given the unique requirements for the data needed, the company turned to CloudFactory’s managed team to build a dataset of hundreds of thousands of images to their exact specifications. This helped them minimize the strain on their in-house development team and accelerate model development.

Deploy and Operationalize

This next phase of the model development process is where AI projects are put to the test and where most failures occur. As 80% of project time is spent in the first phase, it can be frustrating that 96% of failures won’t happen until they get to this point in the process. Innovators are turning to a human-in-the-loop workforce to avoid the pitfalls of poor data quality and the unreliable models that result.

Manual data labeling is the most readily accepted use for a human-in-the-loop workforce but an HITL workforce also can be used to conduct QC on data that has been auto-labeled. Auto-labeling is a feature found in data annotation tools that use AI to pre-label a dataset. These tools can augment the work of humans in the loop to save time and lower costs.

To achieve the highest quality results, auto-labeling should be paired with an HITL workforce to ensure the automation is performing as anticipated. By the same token, during this phase HITL workforces can be deployed to validate the outputs of the model itself, determining model accuracy and identifying areas for improvement.

An AgTech company uses imagery from drones and satellites to provide farmers with crop analytics to help them increase their yields.

CASE STUDY 2:

Data Annotation and Quality Control

Hummingbird Technologies is an agriculture technology (AgTech) company that uses imagery from drones and satellites to provide farmers with crop analytics that can help them increase their yields. They originally relied on agronomists, who are experts in soil and crop management, and their own data scientists to perform manual annotations on their datasets. Using an HITL workforce, Hummingbird outsourced its image annotation work and annotation QC to a dedicated CloudFactory team, allowing their own team to focus on accelerating and supporting new model development and improvements.

Refine and Optimize

In the final phase of the model development process, teams need to start thinking about what is needed for their model in production. They are monitoring to detect and resolve model drift and ensure high performance of the AI system. They also are thinking about how to handle automation exceptions.

They may need to gather, annotate, and train the model again to respond to changes in the real-world environment. Humans in the loop can support all of these important steps, monitoring outcomes, helping to maintain a healthy data pipeline, and identifying and resolving exceptions.

A SportsTech company uses machine learning to provide video analysis and coaching tools to sports teams.

CASE STUDY 3:

Validation, Exceptions, and Optimization

A sports technology (SportsTech) company uses machine learning to provide video analysis and coaching tools to sports teams. Player tracking algorithms identify key moments in the games for coaches and athletes. Outsourcing vital tasks allowed the development team more time to train their model and optimize performance, so they could focus on improving user experience.

CloudFactory’s team validated the model in production by ensuring player tracking labeling was accurate. They also handled exceptions by adding manual labels when the model was not able to do it due to, for example, poor video quality or players standing too close to one another. Data analysts also corrected labels with poor player tracking. All of this led to a better experience for users and a reduction in burdensome work for the model development team.

The AI Lifecycle and Human in the Loop

Now that we have outlined the place where a human-in-the-loop workforce can play an important role in the model development process, let’s look at the unique needs presented at each stage of the AI lifecycle. Each stage includes a subsection of the key areas we have covered, building in a cyclical manner.

Proof of Concept

Establishing proof of concept requires an understanding of the problem to be solved and the data required to use machine learning to address it. This typically includes exploratory data analysis, model development, and experimentation by data scientists or machine learning researchers.

In this stage, an HITL workforce should be considered as an option for data acquisition, cleansing, and annotation needs. Some development teams may also lean on an HITL workforce for light QC and model validation.

In the proof of concept stage of the AI lifecycle, people are needed for data acquisition, cleansing, annotation, and sometimes, annotation QC.

In supervised machine learning, labeled training data is used to create a proof-of-concept model. It is likely the model will require improvements before it is deployed to a production system. For example, the development team may discover that a new class of objects is needed in a labeled image dataset to improve model performance or outcomes.

Automation can be helpful in this phase for data collection and labeling. Web scraping tools can be used, for example, to collect data. Auto-labeling can augment the work of humans in the loop to save time and money. However, auto-labeling has limitations, and it’s important to use people to review those outputs.

Keep in mind, machine learning is experimental. AI project teams may try out various features, algorithms, modeling techniques, and parameter configurations to find what works best. The ideal workforce for proof of concept can stay in communication with developers, understands the changing parameters of the project, and is highly adaptable to changes and iterations in process.

CHALLENGES

Challenges teams are likely to encounter at this phase include:

- Data acquisition or gathering

- Data cleansing, annotation, labeling, or augmentation

- Tracking experiments to learn what worked and what didn’t work

- Reproducing what worked

- Reusing code to minimize the need to write new code every time they run an experiment

- Determining what level of data quality is sufficient to achieve desired results

Testing a machine learning system is more complex than typical software development. Testing must move through stages of data validation and model quality evaluation. This, like all phases of the model development process and the AI lifecycle, is iterative and cyclical in nature. As the model is refined, evolved, and improved, there is learning through the process. A human-in-the-loop workforce must be able to adapt to those changes.

Proof of Scale

Now is the time when AI project teams consider how their model will operate in a production environment, where it is consuming unlabeled and unstructured data in real time. Here lies a big hurdle: proof that the model can and will scale - and this includes the feasibility of scaling in a way that is profitable.

In the proof of scale stage of the AI lifecycle, people are needed to perform the earlier tasks, plus model validation and sometimes, automation exception processing.

Here, it’s important to deploy humans in the loop strategically, particularly in handling edge cases and exceptions. In addition to the tools mentioned in the last stage, there will be opportunities to apply automation to scale and operationalize QC.

Furthermore, machine learning requires a multi-step data pipeline to retrain and deploy models. The sheer complexity of that pipeline will require automation of the steps that were manually completed in the experimentation, training, and testing stages. That automation will need to be monitored and maintained by people.

CHALLENGES

Likely challenges at this phase include those from the previous phase and a few new ones, including:

- Decisions about how the model will fit into existing operations and IT infrastructures where it will be deployed

- Determining how often to retrain models

- Identifying where and how to apply automation to data pipelines

- Operationalizing exception handling

- Determining the many ways scale must be applied, including technology, automation, data pipelines, and humans in the loop

The answers to these considerations will determine how people, processes, and technology will be involved in operationalizing the AI solution and how much change the organization will face in the production phase.

Model in Production

Here, AI project teams achieve and sustain production of the machine learning model. One of the biggest challenges is maintaining an integrated system that can be continuously operated in production. Humans in the loop can help in production in all of the ways they helped develop the model, up to this point. When teams work with the same data analysts throughout the full process, they can benefit from the improvements and optimizations HITL teams can help them deploy across the entire model development process.

When it comes to data, machine learning models are more vulnerable to decay than standard software systems because their performance relies on data that is constantly evolving.

Click To TweetWhen ground truth is substantially different from the data that trained the model, it affects model quality.

This happened in the wake of the COVID-19 pandemic. In Spring 2020, lockdowns around the world cancelled events, closed restaurants and workplaces, and decreased automobile traffic. Machine learning models that were trained on pre-pandemic data were not prepared to handle the unpredictable data being generated in real time. People’s weird behavior was breaking AI models.

When a model is in production, people are needed to perform the earlier tasks, plus model pipeline optimization.

In addition to the tools mentioned in the earlier phases, development teams may apply automation to monitor model drift. Even with automation, they will need people to review outcomes and determine if the model technically degraded or if ground truth has changed. They may decide to change or remove some data features. As in the earlier phases, they will need labeled ground-truth data.

CHALLENGES

At this phase, development teams are likely to encounter all of the challenges from the first two phases and decisions about:

- Who should be involved to monitor the AI system

- How to establish continuous improvement and the criteria for sustaining it, considering return on investment each time the model development process is completed

- What kind of manual work is involved to create ground truth on production data and compare it to what the AI is predicting

- How much of the monitoring process can be automated and what is likely to require human intervention

- How to scale the AI with the confidence to detect and protect against model drift

Managing these considerations helps guard against low performance and other risks. The iterative, cyclical nature of this process continues as teams track the online performance of their model, check outcomes for accuracy, and continue to retrain the model when outcomes don’t meet expectations.

To learn more about the humans in the loop across the AI lifecycle, watch these webinars: Data Prep: What Data Scientists Wish You Knew and Building Your Next Machine Learning Data Set.

Best Practices for an HITL Workforce

It is best to consider the workforce early in the AI lifecycle and before model development begins, if possible.

Click To TweetSome workforce traits are particularly important at each phase of the AI lifecycle.

Workforce Traits: Human in the Loop

PHASE 1

Proof of Concept

- Start quick

- Low commitment

- Agile team

- Experimentation

PHASE 2

Proof of Scale

- Managed team

- Scalable team

- Quality at scale

- Tooling services

- Performance Metrics

- Continuous improvement

PHASE 3

Model in Production

- Managed team

- Scalable team

- Quality at scale

- Tooling services

- Performance Metrics

- Continuous improvement

- Process focus

- Data pipeline

- Monitor KPIs

- Exception process

- Fast turnaround

At the proof-of-concept phase, a workforce should be able to start fast and have the agility to iterate the process, as there will be plenty of experimentation. It’s best for workforces to come with low commitment, that is, not lock development teams into a contract with restrictive terms.

During proof of scale, a managed team can provide the iterative abilities that are critical to being able to make continuous improvements. It is important to use an HITL workforce that can provide quality work and can use the tools that will be deployed at scale. Workers must be able to measure performance so quality can be increased over time.

When a model is in production, an HITL workforce should have all of the capabilities required in the earlier phases and should also have the process focus to be able to drive continuous improvement. Monitoring key performance indicators (KPIs) and handling exception processes will be important. The faster the data workforce can operate and produce quality outcomes, the better.

At each phase, development teams will build on and often repeat the work done in the previous phase. An HITL workforce used consistently throughout the process can improve upon what was done before.

Next Steps

Once a team decides that a workforce is the right choice for their project, the next step is to find the right-fit workforce.

To learn how to evaluate workforce options, download The Outsourcers’ Guide to Quality: Tips for Ensuring Quality Data Labels from Outsourced Teams. Ready to talk to a workforce expert? Connect with us.

About CloudFactory

CloudFactory combines people and technology to provide cloud workforce solutions. Our professionally managed and trained teams can process data for machine learning with high accuracy using virtually any tool. We complete millions of tasks a day for innovators including Microsoft, GoSpotCheck, Hummingbird Technologies, Ibotta, and Luminar. We exist to create meaningful work for one million talented people in developing nations, so we can earn, learn, and serve our way to become leaders worth following.

Contact Sales

Fill out this form to speak to our team about how CloudFactory can help you reach your goals.