Unmanned aircraft systems (UAS), commonly referred to as drones, are set to significantly impact our society, especially with the integration of artificial intelligence (AI), computer vision, and machine learning. These advancements enhance drone autonomy, enabling drones to operate intelligently across sectors such as construction, real estate, indoor and outdoor inspections, surveying, site selection, agriculture, and the delivery of consumer goods and medical supplies. By embracing autonomous drone technology, companies can conduct operations in environments that are hazardous, inaccessible, or time-consuming for humans.

For example, AI-enabled sensors and computer vision technology empower autonomous drones to perform touchless surveys and inspections in industries like mining, oil and gas, energy, and insurance. The surveys and inspections are considerably faster while keeping employees safe and organizations compliant with industry standards. As a result, companies adopting drone autonomy witness increased revenues and lower operational and maintenance costs.

The Difference Between Drone Automation and Drone Autonomy

Understanding the distinction between drone automation and drone autonomy is essential for companies aiming to run drone operations. The two terms are often used interchangeably, but it’s necessary to understand the critical differences if you intend to launch or expand drone operations with the addition of autonomy. Once we clarify the differences, we can dive into the levels of drone autonomy.

Drone Automation

Automated drone processes are commonplace. Consider, for example, when a drone pilot or operator programs a flight path using traditional automation tools such as beacons, waypoints, and geofencing to direct a drone from point A to point B. The drone then flies automatically using an array of sensors, timers, motors, and other electrical components. Although the process can proceed without further user input, a user can step in at any point to tweak the mission or cancel it altogether.
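As a rough illustration of this kind of automation, the sketch below checks a preprogrammed waypoint mission against a geofence before flight. It is a minimal example, not any vendor's flight-planning API: the names `Waypoint`, `Geofence`, and `validate_mission` are hypothetical, and the fence is assumed to be a simple rectangle in local coordinates measured in metres from the launch point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    x: float  # metres east of the launch point (assumed local frame)
    y: float  # metres north of the launch point (assumed local frame)


@dataclass(frozen=True)
class Geofence:
    """Hypothetical axis-aligned rectangular fence in the same local frame."""
    min_x: float
    min_y: float
    max_x: float
    max_y: float

    def contains(self, wp: Waypoint) -> bool:
        # A waypoint is allowed if it lies inside or on the fence boundary.
        return (self.min_x <= wp.x <= self.max_x
                and self.min_y <= wp.y <= self.max_y)


def validate_mission(waypoints, fence):
    """Return the waypoints that fall outside the fence, in mission order."""
    return [wp for wp in waypoints if not fence.contains(wp)]


fence = Geofence(min_x=0, min_y=0, max_x=100, max_y=100)
mission = [Waypoint(10, 10), Waypoint(50, 120), Waypoint(90, 90)]

violations = validate_mission(mission, fence)
print(violations)  # only the second waypoint lies outside the fence
```

Note that every rule here is fixed in advance by the operator. The drone follows the plan but does not decide anything for itself, which is the defining trait of automation.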

Drone Autonomy

Autonomous drone processes are different in that an autonomous drone can make some decisions without user input. This is possible through AI systems that gather data from sensors, satellites, cameras, and videos and then use that data to make decisions. The drone’s decision-making process isn’t confined to a fixed, preprogrammed set of rules. Instead, an autonomous drone can learn from its environment and adapt to changing situations.

Most drones operate with a combination of automation and autonomy, with a recent focus on developing reliable and safe detect and avoid, detect and navigate, and emergency landing technology. These AI applications currently help drones avoid collisions and locate safe landing areas in real time, and they will continue to play a big part once FAA regulations allow for Beyond Visual Line of Sight (BVLOS) flights at scale.

For example, Sharper Shape uses a combination of automation and drone autonomy to provide a holistic asset intelligence solution to improve the operation, reliability, and safety of critical infrastructure. Automated flight paths combined with autonomy-based LiDAR and other sensors provide a faster, safer, more accurate, and cost-effective data collection solution.

Levels of Drone Autonomy

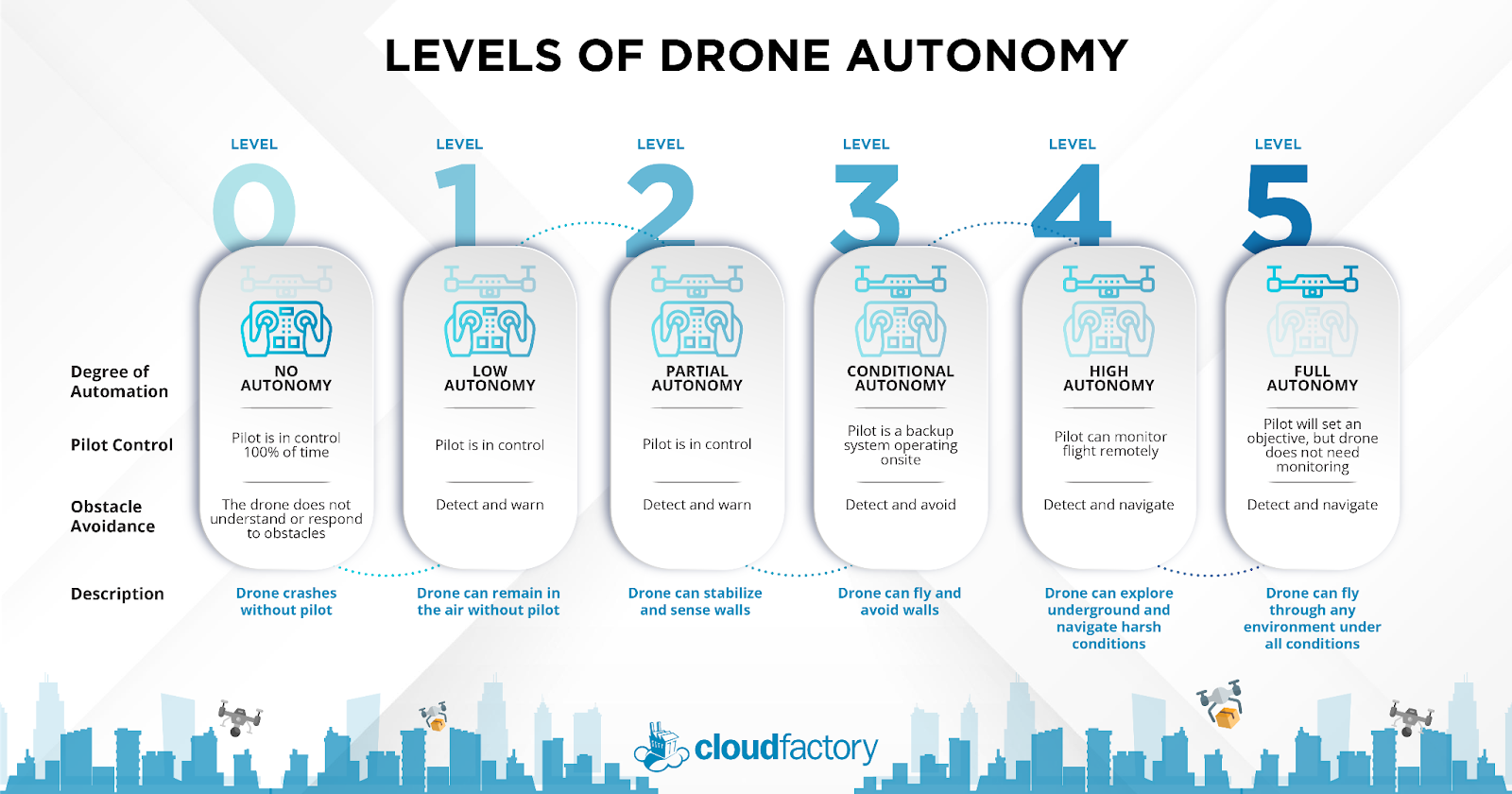

Similar to what’s been done to classify the levels of autonomy in autonomous vehicles, efforts are ongoing in the customer, academic, and industrial communities to define an approach to determine the levels of drone autonomy. As the race to add more autonomy to drones continues with or without an accepted classification, we propose a framework you can use to conceptualize and operationally define levels of drone autonomy. We describe the levels as ranging from 0 (no autonomy) to 5 (full autonomy). The key is to understand these different levels and what they look like in practice within the drone industry.


Level 0 - No Drone Autonomy

Drones that operate at level 0 autonomy need a pilot in control of the drone 100% of the time. Without a pilot, the drone will crash. Level 0 drones lack the ability to understand or respond to obstacles. They are typically used for recreational purposes such as racing.

Level 1 - Low Drone Autonomy

Level 1 drones operate with low autonomy: they can account for the spatial limitations of an environment, such as walls or ceilings, which allows them to operate safely within an enclosed space. These drones can remain in the air without a pilot but must stay in the pilot’s Visual Line of Sight (VLOS). Level 1 drones are usually toy drones with six-axis gyro sensors to aid in stabilization. Some include a “return home” button on the controller, which returns the drone to its launch point when pressed.

Level 2 - Partial Drone Autonomy

In level 2 drones, autonomous systems are more advanced, but the pilot is still in complete control. Although these drones use a combination of sensors, accelerometers, and GPS receivers, a pilot still controls their movements and receives warnings when the drone approaches an obstacle so the pilot can steer it to safety. Level 2 drones cannot detect and avoid or navigate, but they can detect and warn. Like level 0 and level 1 drones, level 2 drones must operate in the pilot’s VLOS.
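The detect-and-warn behavior can be pictured with a short sketch. This is an illustration under stated assumptions rather than a real flight-controller interface: the function `obstacle_warnings`, the 5-metre default threshold, and the sensor names are all hypothetical.

```python
def obstacle_warnings(distances_m, warn_threshold_m=5.0):
    """Return a warning string for every reading closer than the threshold.

    distances_m maps a (hypothetical) sensor direction to a distance in metres.
    The function only reports; it never changes the drone's course, which is
    what separates detect-and-warn from detect-and-avoid.
    """
    return [
        f"Obstacle {direction}: {distance:.1f} m"
        for direction, distance in distances_m.items()
        if distance < warn_threshold_m
    ]


readings = {"front": 3.2, "left": 12.0, "right": 4.9}
warnings = obstacle_warnings(readings)
for message in warnings:
    print(message)
```

The pilot receives the messages and decides how to react. A level 3 system would instead feed the same readings into its own avoidance logic.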

Level 3 - Conditional Drone Autonomy

Level 3 drones operate with conditional autonomy: the pilot is no longer flying the drone but is onsite as a backup in case of an emergency. Most drone delivery operators currently function at level 3 autonomy, where pilots are in place only for emergencies. These drones are equipped with basic detect and avoid systems that use radio and frequency sensors, which help them avoid obstacles such as buildings or poles in the flight path. Level 3 drones can also find safe spaces to land autonomously based on their awareness of the environment.

It’s important to note that level 3 drones in the drone delivery market face regulatory hurdles driven by existing aviation laws that focus on a pilot in the cockpit of an aircraft making decisions. Some commercial delivery drones operate under a Part 107 waiver from the FAA, but there are strict limitations to ensure safety. For example, under Part 107, drones may not fly BVLOS, they may not fly over people or roads, humans must be present onsite, and one pilot can operate only one drone at a time.

Level 4 - High Drone Autonomy

Level 4 drones have a high level of autonomy in that they use advanced detect and avoid systems and detect and navigate systems without pilot action. They can freely explore GPS-free and GPS-denied environments, navigate harsh conditions, and identify people in need without needing a pilot on-site, as pilots can monitor level 4 drones remotely. As FAA regulations loosen, one pilot will be able to manage many BVLOS drones simultaneously. For example, Zipline has scaled up to 24 drones in one fleet under the command of a single operator in Africa. Eventually, an unmanned traffic management system (UTM) will manage drone operations rather than pilots.

Exyn’s drones use GPS-free navigation to freely explore complex spaces without a pilot as a backup. These drones build maps of their surroundings while tracking their movement through the environment, which lets them explore independently without human interaction during flight. This is a significant step up from level 3 drones, which need a human operator to be present and ready to take control of the system at any time.

Keep in mind that Exyn’s drones reached level 4 autonomy by exploring human-free environments such as mines and caves; they are not operating above roads or humans, so regulations are not as strict. Drone delivery operators, on the other hand, will need BVLOS flight clearance and must go through the Part 135 certification process at the FAA to operate drones over people and roads. While this is no easy task, AI and computer vision will help address these hurdles, allowing for safe and predictable drone operations.

Level 5 - Full Drone Autonomy

Level 5 drones are a long way off, but they will fly through any environment in all conditions. These drones will be able to control themselves under all circumstances with no expectation of human intervention. To reach level 5 drone autonomy, a drone’s self-contained navigation performance must be comparable to what it achieves when relying on external systems. There are currently no level 5 drones in production due to a developing regulatory base, the need for advanced systems to manage various unmanned and human-crewed vehicles in the air at one time, and the legwork required to handle the massive volume of data the drone will capture.
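One way to make the six levels above concrete is to encode them as a small lookup table. The sketch below is illustrative only: the names `AutonomyLevel`, `LevelProfile`, `PROFILES`, and `pilot_must_be_onsite` are hypothetical, and the descriptions simply paraphrase the level definitions given in this article.

```python
from dataclasses import dataclass
from enum import IntEnum


class AutonomyLevel(IntEnum):
    NONE = 0
    LOW = 1
    PARTIAL = 2
    CONDITIONAL = 3
    HIGH = 4
    FULL = 5


@dataclass(frozen=True)
class LevelProfile:
    pilot_role: str
    obstacle_handling: str


# Summaries paraphrased from the level descriptions in this article.
PROFILES = {
    AutonomyLevel.NONE: LevelProfile(
        "in control 100% of the time", "none"),
    AutonomyLevel.LOW: LevelProfile(
        "keeps the drone within VLOS", "accounts for walls and ceilings"),
    AutonomyLevel.PARTIAL: LevelProfile(
        "in complete control within VLOS", "detect and warn"),
    AutonomyLevel.CONDITIONAL: LevelProfile(
        "onsite as an emergency backup", "basic detect and avoid"),
    AutonomyLevel.HIGH: LevelProfile(
        "monitors remotely", "advanced detect and avoid, detect and navigate"),
    AutonomyLevel.FULL: LevelProfile(
        "no intervention expected", "self-controlled in all conditions"),
}


def pilot_must_be_onsite(level: AutonomyLevel) -> bool:
    """Levels 0 to 3 keep a pilot onsite; level 4 and above do not."""
    return level <= AutonomyLevel.CONDITIONAL


for level, profile in PROFILES.items():
    print(f"Level {int(level)}: pilot {profile.pilot_role}; "
          f"obstacles: {profile.obstacle_handling}")
```

Using an ordered enum makes comparisons such as "level 3 or below" straightforward, which mirrors how the framework treats each level as a step up from the previous one.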

As the autonomous drone market continues to grow at breakneck speed, AI companies are under increasing pressure to develop, test, and market solutions to make drones safer and more reliable. When it comes to drones, there’s no room for error, as accidents could cause severe injury or even death to animals or humans. The addition of AI and computer vision will play a big role in addressing these issues. It’s only a matter of time before drones are in the air safely, compliant with regulations, and ready to scale.

CloudFactory is helping drive innovation across the drone sector by labeling the massive amounts of individual objects in images and videos to train machine learning and computer vision models to interpret the world around them accurately. Learn more about AI and drones here.
