Computer vision trains machines to interpret and understand the visual world. In essence, it makes it possible for machines to “see,” bringing to life some of the world’s most innovative technology. Use cases that leverage visual data to train neural networks are growing - from smartphone applications that identify animals to contactless food delivery and precision farming. Computer vision holds great promise for organizations around the world to introduce innovative solutions and disrupt entire industries.

However, the promise and challenge of computer vision are the same—massive amounts of visual data must be accurately labeled to train effective AI models. The success of a computer vision project depends not only on high-quality data but also on the workforce that annotates it.

We’ve created this guide to be a handy reference about computer vision applications, data quality, and the workforce.

Want to stay ahead in AI? Bookmark this guide and revisit it as you refine your computer vision strategy.


In this guide, we’ll cover computer vision using supervised learning.

First, we’ll explain computer vision in greater detail, introducing you to key terms and concepts. Next, we’ll explore common computer vision applications in the real world. We’ll also cover data quality, including the kind of data used to create computer vision and the importance of data quality for your models.

Finally, we’ll share why decisions about your workforce choice may determine the success of your computer vision project. We’ll give you considerations for selecting the right workforce and share best practices for the workforce that prepares your data for machine learning.

Introduction:

Will this guide be helpful to me?

This guide will be helpful to you if:

- You are determining if computer vision is the right technique to solve a problem, innovate a product, or provide a service.

- You are getting started on a computer vision project and want to learn more about how data annotation quality can affect your AI model’s performance.

- You want to learn about best practices for data quality when you are building computer vision models.

The Basics:

Computer Vision and Visual Data

What is computer vision?

Computer vision is a form of artificial intelligence (AI) that trains machines to interpret and understand the visual world. Using visual data from the real world, machines can be taught to accurately identify and classify objects, and make a decision or take some action based on what they “see.”

We interact with computer vision every day—often without realizing it. From shopping in retail stores to using touchless delivery services, or even eating an apple grown with AI-powered precision farming, this technology is all around us.

Some applications put the power of computer vision in our hands. When you use your smartphone to scan a retail receipt to, for example, get a reimbursement or a refund, optical character recognition (OCR) can be used to transcribe the text on the receipt to automatically approve or reject your request. The free Seek app, by iNaturalist, allows you to use computer vision to identify plants, animals, and insects - simply by pointing your device’s camera at the object of interest.

The U.S. National Aeronautics and Space Administration’s (NASA) free Globe Observer app invites you to make and submit your environmental observations about trees, clouds, mosquitoes, and land cover. NASA uses these images to enrich its satellite observations to help scientists who study Earth and our global environment.

What kind of data is used for computer vision?

Images, multi-frame images (i.e., video), and sensor data (e.g., from satellites) can be labeled to train and refresh machine learning models for computer vision. The most common types of data used to train computer vision models are:

- Two-dimensional (2-D) images and video (multi-frame) from cameras or other imaging technology, such as an SLR (single-lens reflex) camera, thermal (infrared) camera, optical microscope, or hyperspectral imaging (HSI) device

- Three-dimensional (3-D) images and video (multi-frame), including data from cameras, scanners, or other imaging technology, such as electron, ion, or scanning probe microscopes

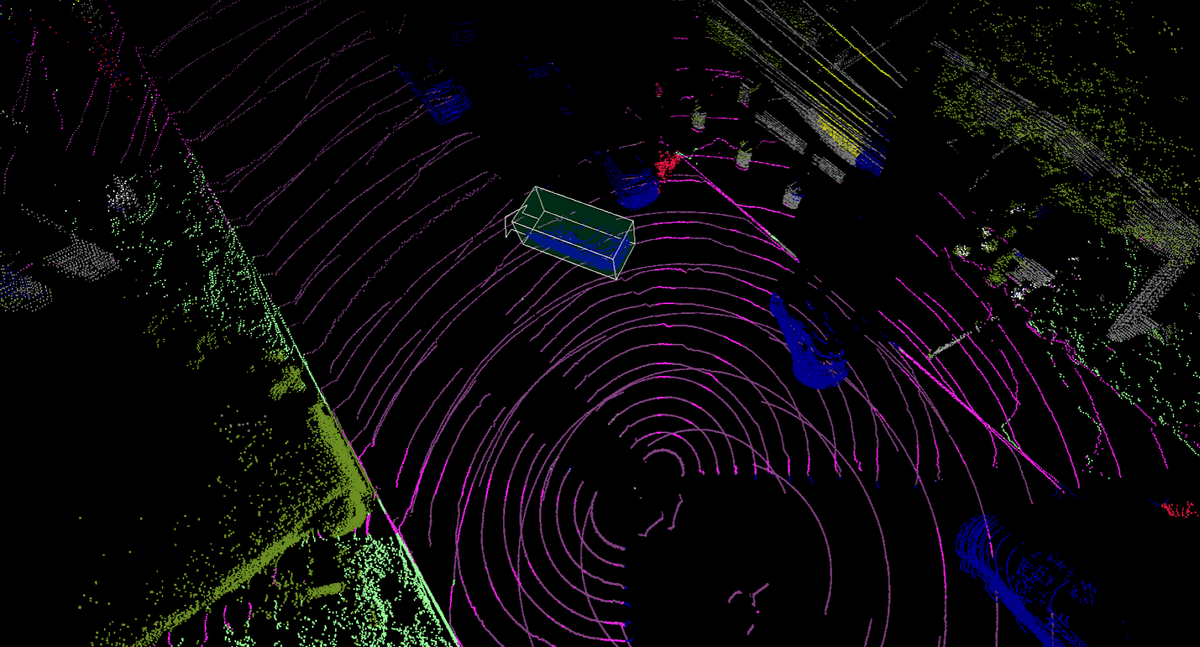

- Sensor data captured with remote technology, such as satellite, RADAR (Radio Detection and Ranging), LiDAR (Light Detection and Ranging), or SAR (Synthetic Aperture Radar). A point cloud is an example of sensor data.

In supervised learning, data is annotated, or labeled, to teach the machine to recognize the objects it is designed to detect. In unsupervised learning, unlabeled data is used to find patterns in the data. Hybrid approaches, often called semi-supervised learning, combine labeled and unlabeled data.
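To make the distinction concrete, here is a minimal sketch in Python. The one-number "features", the labels, and the function names are all hypothetical and chosen only for illustration; real computer vision systems learn from pixels with far larger models.

```python
from statistics import mean

# Hypothetical 1-D features, e.g., the average brightness of each image.
labeled = [(0.10, "night"), (0.20, "night"), (0.80, "day"), (0.90, "day")]
unlabeled = [0.15, 0.12, 0.85, 0.95]


def fit_centroids(examples):
    """Supervised: use human-provided labels to compute one centroid per class."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values, iterations=10):
    """Unsupervised: split unlabeled values into two groups (1-D k-means, k=2).

    Assumes the values are not all identical.
    """
    low, high = min(values), max(values)
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)
    return group_low, group_high


centroids = fit_centroids(labeled)
prediction = predict(centroids, 0.7)   # uses what the labels taught the model
groups = two_means(unlabeled)          # finds structure without any labels
print(prediction)
print(groups)
```

The supervised half can name what it sees ("day") because annotators supplied the names. The unsupervised half can only say that the data falls into two groups.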

How is data annotated for computer vision?

You can annotate images using data annotation tools you build yourself. Or, you can use commercially available, open source, or freeware tools. Since computer vision involves processing large volumes of data, a trained workforce is often essential for accurate annotation.

Data annotation tools provide feature sets with various combinations of capabilities, which can be used by your workforce to annotate images or multi-frame images. Video can be annotated as a stream or frame by frame.

What annotation techniques are used in computer vision?

Annotating visual data for computer vision is called image annotation. Here are nine of the most common image annotation techniques for computer vision:

- Bounding box - This is used to draw a box around a target object in visual data. Bounding boxes can be 2-D or 3-D.

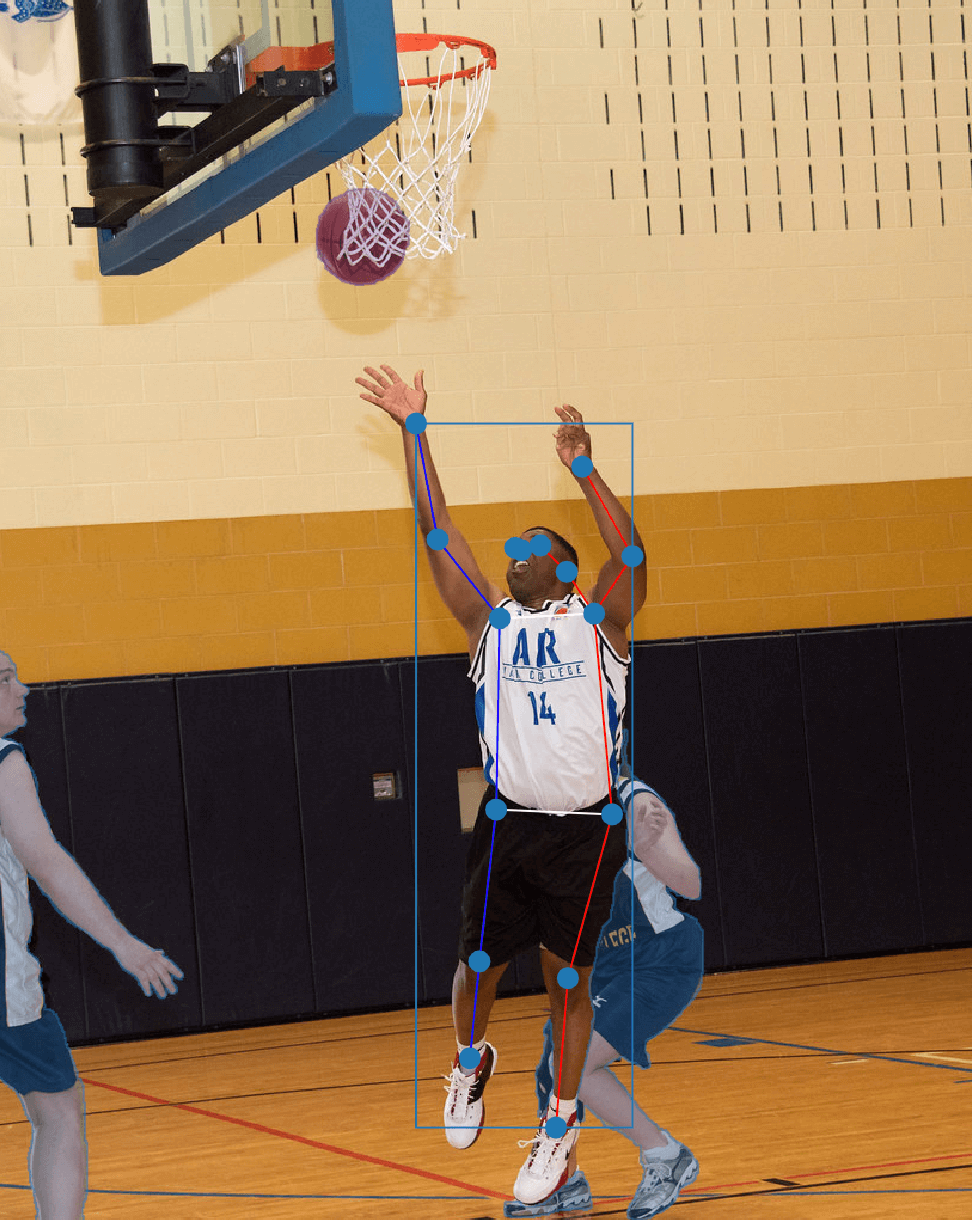

- Landmarking (key point annotation) - This is used to plot characteristics in the data, such as eyes and nose in an image used for facial recognition.

- Wireframe - This is a more complex version of landmarking that is used to annotate geometric features, straight lines, and their intersections to assemble 3-D structures within a scene.

This image depicts a keypoint schema of straight lines, occlusions, and intersections for identifying a basketball player's position within space. Source: CloudFactory using its internal data annotation tool.

- Masking - This applies semantic or instance segmentation to conceal areas in an image and reveal other areas of interest. Image masking makes it easier to focus on certain areas of an image over other areas.

- 3-D cuboids - This refers to the use of 3-D bounding boxes to annotate and/or measure many points on an external surface of an object. The point data being annotated is typically generated using 3-D laser scanners, RADAR sensors, and LiDAR sensors.

This is an example of a point cloud that is annotated for computer vision using a 3-D cuboid around the target object. Source: UnderstandAI using Pointillism, its data annotation tool.

- Polygon - This is used to annotate the vertices, or points, along the outline of the target object to reveal its edges. Polygons are used when objects are irregular in shape, such as homes, areas of land, or topographical details.

- Polyline - This is used to plot lines composed of one or more segments and is used when working with open shapes, such as road lane markers, sidewalks, or power lines.

- Object tracking - This is used to label and track an object’s movement across more than one frame of video.

This is an example of image annotation for computer vision using tracking. The truck is the object of interest, and its movement spans multiple frames of video.

- Transcription - This is used to capture and label the text that occurs in images or video. This annotation can be done manually, or it can be automated. Optical character recognition (OCR) is an automated form of transcription that can identify letters and numbers in documents, such as purchase receipts.
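As a rough illustration of how a few of these techniques might be stored once an annotator has drawn them, here is a minimal Python sketch. The class names, fields, and coordinate conventions are assumptions made for this example; each annotation tool defines its own export format.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


@dataclass
class BoundingBox:
    """2-D box: top-left corner plus width and height."""
    label: str
    x: float
    y: float
    width: float
    height: float

    def area(self) -> float:
        return self.width * self.height


@dataclass
class Keypoint:
    """Landmark: a single labeled point, such as an eye or nose."""
    label: str
    x: float
    y: float


@dataclass
class Polygon:
    """Closed shape: the last vertex connects back to the first."""
    label: str
    vertices: List[Point]

    def area(self) -> float:
        # Shoelace formula for the area of a simple polygon.
        total = 0.0
        n = len(self.vertices)
        for i in range(n):
            x1, y1 = self.vertices[i]
            x2, y2 = self.vertices[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0


@dataclass
class Polyline:
    """Open shape: an ordered run of points, such as a lane marker."""
    label: str
    points: List[Point]

    def length(self) -> float:
        return sum(math.dist(a, b) for a, b in zip(self.points, self.points[1:]))


box = BoundingBox("truck", x=10, y=20, width=100, height=50)
roof = Polygon("roof", [(0, 0), (4, 0), (4, 3), (0, 3)])
lane = Polyline("lane_marker", [(0, 0), (3, 4), (3, 10)])
print(box.area(), roof.area(), lane.length())
```

The difference between a polygon and a polyline shows up in the code: the polygon wraps around to its first vertex to enclose an area, while the polyline only measures the path between consecutive points.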

What is a computer vision algorithm?

A computer vision algorithm is a process with a finite set of well-defined instructions that a machine can use to learn how to understand and interpret the visual world. More simply, a computer vision algorithm is like a recipe for how to bake cookies. It’s the set of rules for how the machine should recognize objects of interest and, sometimes, take some action as a result of what it interprets.

Have questions about implementing computer vision? Our team is here to help — contact us to discuss your AI goals.

Common Applications of Computer Vision

Applications of computer vision are growing, as there are:

- Daily opportunities to generate more data from cameras, sensors, and scanners

- Technological advancements in image-capturing hardware and software

- Increases in computer processing speeds

- Growing annotation tool options available to annotate, or label, data

- Better techniques and algorithms for computer vision, such as convolutional neural networks (CNNs or ConvNets)

- More available, affordable imaging devices (e.g., smartphones, sensors), data storage (e.g., cloud, container), and data annotation tools (e.g., open source software)

Here are examples of common applications of computer vision from select industries:

Agriculture: enhancing crop management

Technology holds the promise of solving many of the challenges and inefficiencies in the global production and distribution of food. The digital transformation is predicted to impact every stage of the value chain for agriculture, an industry that is centuries old. The digitization of the food and agriculture sectors can strengthen economic, nutritional, and environmental outcomes around the world.

Agriculture technology, also known as AgTech or farmtech, is a growing discipline that applies technology to increase the profitability, efficiency, and sustainability of farms and farming practices. It uses computer vision and machine learning to help farmers make more precise decisions, replacing instinct and experience-based decisions with data-driven predictive models for improved accuracy and control.

Farmers use GPS (Global Positioning System), IoT (Internet of Things) devices, sensors, drones, and autonomous vehicles to capture visual data about everything from growing and harvesting to transport and distribution. Much of the visual data these systems analyze is unstructured and can be annotated to train and deploy a computer vision system.

One AgTech application is precision farming, where technology is used to improve production and reduce waste. For example, computer vision models can learn from annotated images to automate stand counts, predict crop yields, and analyze plant health to determine optimal levels and precise areas to apply fertilizer, herbicides, and seeding. Hummingbird Technologies uses drone and satellite imagery to create computer vision systems that help farmers increase crop yields and farm more sustainably.

Other computer vision applications in AgTech include optimizing farm staffing by predicting the best time to harvest. Computer vision can even power the robotic harvesting technology that does the work. Pasture management is another application: Rezatec, a geospatial AI company, uses satellite data in computer vision to optimize the grazing performance of sheep, cattle, and other livestock.

Healthcare: detecting data anomalies

In healthcare, the provision of care is costly, and the quality of care a patient receives can mean the difference between life and death. Since medical imagery became available at the end of the 19th century with the discovery of the X-ray, most visual medical data has had to be analyzed by a person with medical expertise.

Today, a large portion of medical visual data comes from imaging technology, such as CT (Computed Tomography) or MRI (Magnetic Resonance Imaging) scanning systems. In an industry where this kind of data is ever-growing, computer vision systems offer promise for analyzing medical images quickly to support healthcare professionals in making faster and more accurate decisions.

Computer vision can analyze large amounts of patient data to detect anomalies and patterns faster than people can. For example, it can be used to identify cancerous tumors in CT scan images, and some studies have reported models that detect lung cancer as accurately as, or more accurately than, radiologists.

It also can be used to power medical AI systems that can alert healthcare practitioners about patient risks and help them diagnose conditions earlier. For example, medical AI is being used to enhance medical professionals’ understanding of health issues and optimize preventive care. It’s being used to annotate X-ray datasets to aid in COVID-19 research.

Want to learn more about AI’s role in medical imaging and diagnostics? Check out CloudFactory's Medical AI Guide for insights on improving data quality and accelerating AI development in healthcare.

Security: advanced threat detection

Security is among the most common applications of computer vision. Security can be applied across many contexts, including device protection, theft identification in retail environments, and violence detection in crowded public spaces, among many others. We use computer vision to safeguard our smartphones and tablets that are equipped with facial recognition to unlock them for our use. In China, some retailers use facial-recognition payment technology, so consumers don’t have to use cash or payment cards.

Computer vision can be used to scan live or recorded video footage to provide security officers with vital information, such as detecting guns in a public area. Facial recognition for this use case - to identify harmful or criminal activity - has come under scrutiny for its use by law enforcement to identify suspects of crimes. The algorithms that underpin this technology have flagged innocent people as suspects of crimes, when algorithms falsely matched their photos with security video footage.

For this reason, several large technology companies announced they have stopped offering, developing, or researching facial analysis software. In general, the tech and security industries have much to learn about how to avoid algorithmic bias and false positives, particularly in cases where data is used to make decisions about individuals’ future freedoms.

Transportation: autonomous vehicles

For centuries, transportation has applied technology to deliver people, goods, and services to places quickly and efficiently. Today, the industry is in the midst of a wide-ranging digital transformation, driven in part by computer vision. Applying AI is challenging for organizations at every stage of growth, from startup to enterprise, typically due to the shortage of skills to design, annotate data for, and validate new AI-driven ways to solve age-old, painful problems.

We’re seeing growth in computer vision for transportation with the proliferation of autonomous vehicle capabilities, such as intelligent automobile features like emergency braking and lane detection, driver-assistance systems like Tesla’s Autopilot, and autonomous robots for contactless delivery of food and medicine.

The potential benefits of computer vision in transportation are compelling and virtually endless:

- Safer vehicles with fewer accidents and reduced congestion on streets and highways

- Reduced pollution from gridlocked roadways

- Autonomous delivery of food and medicine to at-risk populations in developing nations

- More efficient and predictable public transportation, including railways, subways, and buses

- Greater safety in the transportation of flammable materials via pipelines

Transportation is among the most promising and visible applications of computer vision. However, the promise of autonomous vehicles has not been realized as fast as some had hoped, due to the intensive data preparation and software and technology development requirements to ensure safety. We can expect to see computer vision contribute to significant advancements in transportation for many years to come.

Curious about how AI is transforming industries? Explore our client stories for more real-world computer vision applications.

Challenges in Computer Vision: Data Quality & Accuracy

Quality training data is crucial in designing computer vision models. After all, these models are used to guide equipment that, for example, can suture patients’ incisions during surgery or navigate busy streets to provide driverless transportation. High-performance computer vision models depend on quality and consistency across your datasets.
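One common way to put a number on annotation consistency, although this guide does not name it, is intersection over union (IoU): the overlap between two boxes divided by their combined area. The sketch below compares two annotators' boxes for the same object. The coordinates and the review threshold are hypothetical values chosen for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators label the same truck; the second box is shifted 10 pixels right.
annotator_1 = (10, 10, 110, 60)
annotator_2 = (20, 10, 120, 60)

REVIEW_THRESHOLD = 0.9  # hypothetical cutoff; set this per project
score = iou(annotator_1, annotator_2)
needs_review = score < REVIEW_THRESHOLD
print(round(score, 3), needs_review)
```

A score of 1.0 means the two boxes match exactly and 0.0 means they do not overlap. Items that fall below the chosen threshold can be routed to a reviewer.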

Low-quality annotated data can backfire twice: first during computer vision model training and again when your model uses what it learned from that data to make future predictions. Even after a model is in production, machine learning requires periodic retraining to take into account real-world changes in the environment where the model is deployed. To build, test, and maintain high-performing computer vision models in production, you must train them using trusted, reliable data.

And we’re talking about vast amounts of data. Computer vision can require millions of images to train, validate, and test a model. And while there is more data available every day, that data must be carefully and accurately annotated to be useful in supervised machine learning and deep learning.
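As a small illustration of the train, validate, and test stages, the sketch below shuffles a list of annotated image files and splits it into three non-overlapping sets. The 80/10/10 ratio, the file names, and the function name are assumptions for this example, not recommendations from this guide.

```python
import random


def split_dataset(items, train=0.8, validation=0.1, seed=42):
    """Shuffle items reproducibly and split them into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)
    return (
        shuffled[:n_train],                 # used to fit the model
        shuffled[n_train:n_train + n_val],  # used to tune it during development
        shuffled[n_train + n_val:],         # held back for the final evaluation
    )


# Hypothetical file names standing in for annotated images.
image_ids = [f"img_{i:04d}.png" for i in range(1000)]
train_set, val_set, test_set = split_dataset(image_ids)
print(len(train_set), len(val_set), len(test_set))
```

Keeping the three sets disjoint matters: a model evaluated on images it was trained on will look more accurate than it really is.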

The challenge for AI developers is transforming that massive volume of raw data into structured data that can be used to train models. Video annotation is especially labor-intensive: each hour of video collected can take almost 800 human hours to annotate.

The visual data used for computer vision is not only big data; it’s multimodal data, with high variety across different imaging technology. Some use cases require multiple types of data to perform properly, and that requires substantially more work and effort to annotate.
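To see what that ratio means for planning, here is a back-of-the-envelope calculation. It takes the figure of roughly 800 human hours per hour of data from the text above as an upper bound. The project size, team size, and 40-hour week are hypothetical inputs.

```python
HUMAN_HOURS_PER_DATA_HOUR = 800  # rounded-up figure quoted in the text above


def annotation_effort(data_hours, annotators, hours_per_week=40):
    """Return (total human hours, calendar weeks) for a team of annotators."""
    human_hours = data_hours * HUMAN_HOURS_PER_DATA_HOUR
    weeks = human_hours / (annotators * hours_per_week)
    return human_hours, weeks


# Hypothetical project: 10 hours of collected video and a team of 50 annotators.
human_hours, weeks = annotation_effort(data_hours=10, annotators=50)
print(human_hours, weeks)
```

Under these assumptions, ten hours of footage becomes 8,000 human hours of work, or about four weeks for a 50-person team.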

For example, autonomous driving systems can require image, video, 3-D point cloud, and/or sensor data. It takes teams of hundreds of people using advanced software to transform that raw data into sequences and label it, sometimes frame by frame. The same is true with healthcare, where different imaging technology is used, from X-rays to CT scans to MRI.

Data quality is everything in AI. Let’s chat about how you can improve your training data for better computer vision models.

The Solution:

People, Process, and Technology

Annotating data for computer vision models requires a strategic combination of people, process, and technology. Here are considerations and best practices for each one.

People: selecting the right workforce

Choosing the right people to annotate, or label, your data for computer vision is one of the most important decisions you will make. Computer vision and other machine learning models are only as good as the data they are trained on, which means you will need people, often referred to as “humans in the loop,” to prepare and quality-check your image annotations.

You generally have five options for your data labeling workforce:

- Employees - They are people who are on your payroll. Their job description may not include data annotation.

- Contractors - These are temporary workers (e.g., freelance, gig workers). They may work remotely or at your location.

- Managed outsourced teams - These are professionally managed teams of annotators who can transition to remote work (e.g., CloudFactory). With this model, you have direct access to workers.

- Business process outsourcing (BPO) - This is a traditional outsourcing option where a third party hires workers and places them in offices to get the work done. BPOs do not give you access to the people who are doing the work.

- Crowdsourcing - These are anonymous workers sourced using third-party platforms.

Basic domain knowledge and an understanding of your business rules are essential for your workforce to create high-quality annotated datasets for machine learning. Workers label data with higher quality when they have context, that is, when they know how the data they are annotating relates to the problem you are solving. It’s also important that your workforce can train new annotators on your rules related to context and edge cases.

To achieve this, you will need direct communication with your annotation team. Reliable communication and collaboration with your project team will ensure your annotators can share what they are learning as they work with your data. You can use their insights to adjust your approach when it’s helpful to do so.

Process: optimizing data annotation

Designing a computer vision model is an iterative process. Data annotation evolves as you train, validate, and test your models. Along the way, you’ll learn from their outcomes, so you’ll need to prepare new datasets to improve your algorithm’s results.

Your data annotation team should be agile. That is, they must be able to incorporate changes in your annotation rules and data features. They also should be able to adjust their work as data volume, task complexity, and task duration change over time.

Your team of data annotators can provide valuable insights about data features - that is, the properties, characteristics, or classifications - that you want to analyze for patterns that will help train the machine to predict the outcome you are targeting.

Technology: choosing the right tools

Data annotation tools are software solutions that can be cloud-based, on-premise, or containerized. You can use them to annotate pipelines of production-grade training data for machine learning. You can decide to take a do-it-yourself approach and build your own tool, or you can choose one of the many data annotation tools available via open source or freeware.

Another option is to choose a commercial data annotation tool, available for lease or purchase. A growing number of these tools are full-featured and support the complete annotation workflow.

The challenge is deciding how to select the right tool for your computer vision project. Your first choice will be a critical one: build vs. buy. You’ll also have at least six important data annotation tool features to consider: dataset management, annotation methods, data quality control, workforce management, security, and integrated labeling capabilities.

Another important aspect of tool selection is workforce. Whether you use employees or contractors, crowdsourcing, or an outsourcing provider, your workforce will need access to and training on your data annotation tool. Computer vision algorithms must consume vast pipelines of visual data to train, validate, and test your models. Both how your workforce accesses the data to be annotated and how they interact with the annotation tool you choose will have an important impact on the success of your project.

The CloudFactory Approach to Computer Vision

At CloudFactory, we have a decade of experience professionally managing data annotation teams for organizations around the world that are developing computer vision solutions. To every project, we bring:

People Who Deliver Quality at Scale

Our team members are known, vetted, and professionally managed, so their domain knowledge and proficiency with your rules and process improve over time. We track and measure quality, and we can add quality assurance (QA) to ensure high accuracy and manage exceptions. We put you in direct communication with a team leader, who works alongside the annotation team and creates a closed feedback loop to support communication with you.

Agile Process

We’ve worked with many of the world’s top autonomous vehicle companies, so we know about quality data for computer vision. We bring a decade of experience to your project, and we know how to design workflows that are built for scale.

We have experience with a wide variety of tasks and use cases and a deep understanding of workforce training and management for data annotation. We can scale your successful process with just a few or as many as thousands of workers. Our team approach ensures task iterations are managed quickly and efficiently.

Tool Agnostic

We don’t lock you into using a data annotation tool that we provide. We’re tool-agnostic, so we can work with any tool on the planet, even the ones you build yourself. We maintain partnerships with the top data annotation tool providers and can recommend best-fit tools for your use case.

Get in touch - Let’s discuss how we can support your computer vision project with a professionally managed workforce.

We’re on a mission.

At CloudFactory, our guiding mission is to create work for one million people in the developing world. We provide workers training, leadership, and personal development opportunities, including participation in community service projects. As workers expand and grow their experiences, their confidence, work ethic, skills, and upward mobility increase, too. Our clients and their teams are an important part of our mission.

Are you ready to learn how you can scale data annotation for computer vision with a professionally managed workforce? Find out how we can help you.


Frequently Asked Questions

What is computer vision?

Computer vision is a form of artificial intelligence (AI) that trains machines to interpret and understand the visual world. Using visual data from the real world, machines can be taught to accurately identify and classify objects, and make a decision or take some action based on what they “see.”

In supervised learning, humans are in the loop. They annotate, or label, visual data that can be used to teach the machine to recognize, and sometimes track, the objects it is designed to detect. In unsupervised learning, unlabeled data is used to find patterns in the data.

What are algorithms and applications of computer vision?

Convolutional neural networks (CNNs or ConvNets) are commonly used in deep learning for computer vision, along with other algorithms. Common applications of computer vision include AgTech (or farmtech) to optimize food production and distribution, medical AI for detecting disease, device security, and autonomous vehicles.

One of the most cited references on this topic is a book, Computer Vision: Algorithms and Applications, written by Richard Szeliski, based on his lectures at the University of Washington and Stanford University. While the first edition was published in 2010, it remains an excellent resource for foundational knowledge about algorithms and applications of computer vision.

What is deep learning for computer vision?

Deep learning is a more complex form of machine learning, and both are forms of artificial intelligence (AI). Deep learning with supervised learning uses annotated visual data to train machines to interpret and understand the visual world and make decisions or take action based on what they “see.”

Deep learning uses convolutional neural networks (CNNs or ConvNets) and other techniques to perform various computer vision tasks, such as object detection, facial recognition, action and activity recognition, and human-pose estimation.
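The core operation inside a CNN layer can be sketched in a few lines of plain Python: a small grid of weights, called a kernel, slides across the image and produces a high value wherever the pixels match the pattern it encodes. In a real network the kernel values are learned from annotated data. The hand-picked kernel and tiny image below are assumptions for illustration only.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding (valid mode).

    This is cross-correlation, which is the operation CNN layers
    conventionally call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 3x4 image that is dark (0) on the left and bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds where brightness increases left to right.
kernel = [[-1, 1]]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The output is nonzero only in the column where dark meets bright, so this kernel acts as a simple vertical edge detector. Deep networks stack many such learned kernels to recognize progressively more complex patterns.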

What is a computer vision algorithm?

A computer vision algorithm is a process with well-defined instructions that a machine can use to learn how to consume visual data, interpret or understand the visual world, and take some action as a result of what it “sees.” More simply, a computer vision algorithm is like a recipe for how to bake cookies. It’s the set of rules for how the machine should recognize objects of interest and, sometimes, take some action as a result of what it sees in the visual data it analyzes.

What are some computer vision applications?

There are many applications of computer vision. Some common applications include:

- Precision agriculture applies technology to increase the profitability, efficiency, and sustainability of farms and farming practices. It uses computer vision to support farmers’ decisions with predictive models that create a more controlled and accurate farming environment.

- Medical AI can analyze large amounts of visual patient data to detect anomalies and patterns faster than people.

- Security, including device protection, theft identification in retail environments, and violence detection in crowded public spaces, can be powered by computer vision.

- Transportation applications of computer vision are growing with increasing autonomous vehicle capabilities, such as emergency braking, lane detection, and self-driving cars.

Where can I find a guide to convolutional neural networks for computer vision?

Recent developments in convolutional neural networks (CNNs or ConvNets) have led to high performance in machine vision tasks and systems. CNNs form the essence of deep learning algorithms in computer vision.

A Guide to Convolutional Neural Networks for Computer Vision is among the most frequently cited textbooks on this topic. The guide is helpful to understand the theory behind CNNs and application of CNNs in computer vision. It provides an introduction to CNNs starting with the essential concepts behind neural networks: training, regularization, and optimization.

What is computer vision technology?

Computer vision technology can refer to more than one thing. It can mean the technology that is used to capture the visual data that is used to create computer vision, such as digital cameras, magnetic resonance imaging (MRI) devices, or LiDAR (Light Detection and Ranging) sensors.

It can refer to the neural networks and software platforms that power computer vision systems. It also can refer to the technology that is created using computer vision, such as emergency braking in automobiles, or computer-vision-enabled robotics that monitor crops to identify the optimal time for harvest.

Where can I learn about Azure for computer vision?

There are many great online learning resources available to learn about Azure for computer vision. Microsoft owns and maintains Azure and offers a free learning path for Azure.

Platform learning is available as well, and it can be quite affordable. Cloud Academy offers this course: Designing Solutions Using Azure Cognitive Solutions. Udacity collaborated with Microsoft to create a nanodegree program and scholarship for students learning about Azure and machine learning. Udemy offers a course called Azure Machine Learning using Cognitive Services.

Is computer vision machine learning?

Creating a computer vision system typically requires the use of machine learning (ML), and it often involves deep learning (DL), which is a subset of ML. In essence, computer vision is a form of artificial intelligence (AI) that trains computers to interpret and understand visual information and take action or make a decision based on what they “see.” Computer vision uses photos, videos, and other visual data to train machines to identify, classify, track, and/or react to what they interpret visually.

Is computer vision AI?

Yes. Computer vision is a form of artificial intelligence (AI) that makes it possible for machines to consume visual data and take action or make some decision as a result of what they “see.” AI developers use visual data to train systems to recognize and classify the objects they are designed to detect and, sometimes, take some prescribed action as a result.